Welcome to FEAR 2025

The Second International Workshop on Formal Ethical Agents and Robots (FEAR) will be held on 4 November 2025, with a co-located tutorial on 3 November 2025. Both events will take place in the Kilburn Building, University of Manchester, UK.

Overview

Recent advances in artificial intelligence have raised a range of concerns about the ethical impact of the technology. These include concerns about the day-to-day behaviour of robotic systems that will interact with humans in workplaces, homes and hospitals. A recurring theme is the need for such systems to take ethics into account when reasoning. This has generated new interest in how we can specify, implement and validate ethical reasoning. The aim of this workshop is to look at formal approaches to these questions. Topics of interest include, but are not limited to:

- Logics for morality and ethics

- Knowledge representation of ethical theories and ethically salient information

- Computational modelling of morality and ethics

- Specification of ethical reasoning and behaviour

- Verification of ethical reasoning and behaviour

- Formal modelling of ethical accountability

Schedule and Location

This year we will hold a free one-day workshop with informal proceedings, consisting of short talks and opportunities for discussion, and a free one-day tutorial that engages participants with the state of the art in machine ethics.

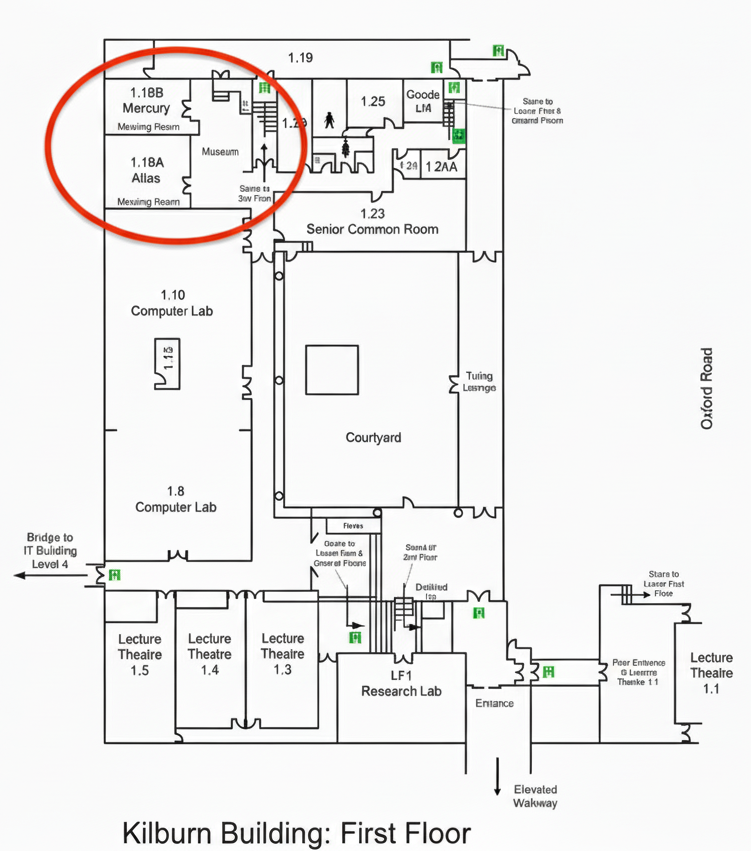

The workshop and tutorial will be held in the Atlas Room, on the first floor of the Kilburn Building, University of Manchester, Oxford Road, Manchester M13 9PL.

The most convenient way to enter and leave the building is through the entrance on its north side.

See the map of the first floor below.

FEAR will be a hybrid meeting, supporting both virtual and in-person attendance. We strongly encourage in-person attendance.

Tutorial: Monday, 3 November 2025

| Time | Session |
|---|---|
| 11:00 | Welcome |
| 11:15 | What is Machine Ethics - Marija Slavkovik, University of Bergen |
| 12:15 | Lunch |
| 13:00 | Normative Multi-Agent Systems: An Introduction - Marina de Vos, University of Bath |
| 14:00 | Reward Machines and Norms - Brian Logan, University of Aberdeen |
| 15:00 | Coffee |
| 15:30 | Evaluating Machine Ethics - Louise Dennis, University of Manchester |

Workshop: Tuesday, 4 November 2025

| Time | Session |
|---|---|
| 10:00 | Welcome |
| 10:15 | Keynote Talk: What Virtues Should a Care Robot Possess? Some Simulation Experiments and Human Results - Vivek Nallur, University College Dublin |
| 11:15 | Coffee |
| 11:45 | NAEL: Non-Anthropocentric Ethical Logic (Short Paper) - Bianca Maria Lerma and Rafael Penaloza |
| 12:00 | Lunch |
| 13:00 | Keynote Talk: Evolving Normative Systems: Adapt or Become Irrelevant - Marina de Vos, University of Bath |
| 15:00 | Coffee |
| 15:30 | Four Freedoms for Deontic Logic: A Framework for Scalable AI Ethics - Ali Farjami |
| 16:00 | Causal Anticipation for Reason-Based AI Alignment (Short Paper) - Yannic Muskalla |
| 16:15 | Community Discussion |
| 17:00 | Close |

Keynote Speakers

Evolving Normative Systems: Adapt or Become Irrelevant - Marina De Vos, University of Bath

Norms, policies and laws guide behaviour in human societies; as socio-technical systems increasingly combine human and software agents, they too require explicit, adaptable norms. Early normative frameworks were fixed at design time, but static rules soon become irrelevant. Recent work explores run-time synthesis and revision of norms to keep pace with evolving environments and stakeholder needs. This talk introduces the Roundtrip Engineering Framework (Morris-Martin, De Vos & Padget), a dynamic approach in which agents' experiences feed back into the normative model, enabling run-time modification of rules. We demonstrate its feasibility by encoding the framework in the InstAL normative specification language and employing XHAIL, a symbolic machine-learning system, for automated norm revision. Finally, we discuss current challenges and potential avenues for addressing them.

Marina De Vos is a senior lecturer/associate professor in artificial intelligence and the director of training for the UKRI Centre for Doctoral Training in Accountable, Responsible, and Transparent AI at the University of Bath. With a strong background in automated human reasoning, Marina's research focuses on enabling improved access to specialist knowledge, the logical foundations of AI systems, explainable artificial intelligence methods, and modelling the behaviour of autonomous systems. In her work on normative multi-agent systems, Marina combines her interests in the development of software tools and methods, drawing on a diverse range of domains including software verification, logic programming, legal reasoning and AI explainability, to model, verify and explain autonomous agents effectively. She is currently exploring systems that can evolve autonomously in response to external and internal stimuli.

What Virtues Should a Care Robot Possess? Some Simulation Experiments and Human Results - Vivek Nallur, University College Dublin

There is broad consensus that robots designed to work alongside or serve humans must adhere to the ethical standards of their operational environment. To achieve this, several methods based on established ethical theories have been proposed. Nonetheless, numerous empirical studies show that real-world ethical requirements are very diverse and can vary considerably from region to region. This rules out the idea of a universal robot that can fit into any ethical context. However, creating customised robots for each deployment using existing techniques is challenging. This talk presents a way to overcome this challenge by introducing a virtue-ethics-inspired computational method that enables character-based tuning of robots to accommodate the specific ethical needs of an environment. Using a simulated elder-care environment, I will illustrate how tuning can be used to change the behaviour of a robot that interacts with an elderly resident in an ambient-assisted environment.

Dr. Vivek Nallur works on Computational Machine Ethics. He is interested in how to implement and validate ethics in autonomous machines. What kinds of ethical decision-making can we implement? How can these be checked and reliably tuned to individual circumstances? What combinations of individually ethical decisions could lead to unethical behaviour? These are, by nature, interdisciplinary questions, and he is keen to collaborate with researchers in philosophy, law, politics and related fields. He is also a Senior Member of the IEEE, and serves as a full voting member of the IEEE P7008 Standards Committee for Ethically Driven Nudging for Robotic, Intelligent and Autonomous Systems.

Multi-Agent Systems (MAS) are his preferred tool for approaching problems in decision-making, self-adaptation, complexity and emergence. They lend themselves to extensive experimentation: having all agents follow simple rules, implementing complex machine-learning algorithms, and investigating the interplay of different algorithms used at the same time are all possible with relatively simple conceptual structures.

Submission Information

We will accept three types of paper, all prepared using the EPTCS LaTeX style:

- Regular papers describing completed research (up to 16 pages in length).

- Short papers describing work-in-progress or directions for future work (up to 8 pages in length).

- Abstracts providing a high-level description of research published (or submitted or intended for publication) elsewhere (up to 2 pages in length).

Papers should be submitted using OpenReview. The paper type should be included as part of the paper title. Reviews will be single-blind. Please note that OpenReview can take up to two weeks to approve a new profile registration that does not contain an institution email address.

We intend to host informal workshop proceedings on OpenReview.

Important Dates

- Submission Deadline: Sunday, 17th of August 2025, 11:59 UTC-0

- Notification: Thursday, 11th of September 2025

- Submissions remain open until 11 September 2025. Papers will be evaluated as they are received, with a decision no later than 4 weeks after the date of submission.

- Workshop: Tuesday, 4th of November 2025

Program Chair

Program Committee

- Kevin Baum, DFKI

- Andreas Brännström, Umeå University

- Joe Collenette, University of Chester

- Alex Jackson, King's College London

- Aleks Knoks, University of Luxembourg

- Simon Kolker, University of Manchester

- Vivek Nallur, University College Dublin

- Samer Nashed, University of Montreal

- Maurice Pagnucco, University of New South Wales

- Luca Pasetto, University of Luxembourg

- Jazon Szabo, King's College London

- Maike Schwammberger, Karlsruhe Institute of Technology

- Dieter Vanderelst, University of Cincinnati

- Gleifer Vaz Alves, Federal University of Technology – Paraná

- Andrea Vestrucci, University of Bamberg

- Yasmeen Rafiq, University of Manchester