Welcome to FEAR 2025

The Second International Workshop on Formal Ethical Agents and Robots (FEAR) will be held on 4 November 2025, with a co-located tutorial on 3 November 2025. Both take place in the Kilburn Building at the University of Manchester, UK.

Overview

Machine ethics, the field where most work on formal ethical agents and robots is found, is broadly concerned with equipping autonomous intelligent agents with explicit moral reasoning capability. This problem poses many challenges and requires many minds from different disciplines. As part of planned activities within the Distinguished International Associates program with the Royal Academy of Engineering, we would like to consolidate and enlarge the field of formal methods for machine ethics within the UK. To this end, we are offering this tutorial, co-located with the Second International Workshop on Formal Ethical Agents and Robots (FEAR).

Schedule and Location

This year we will hold a free one-day workshop with informal proceedings, consisting of short talks and opportunities for discussion, and a free one-day tutorial that encourages conversation about the state of the art in machine ethics.

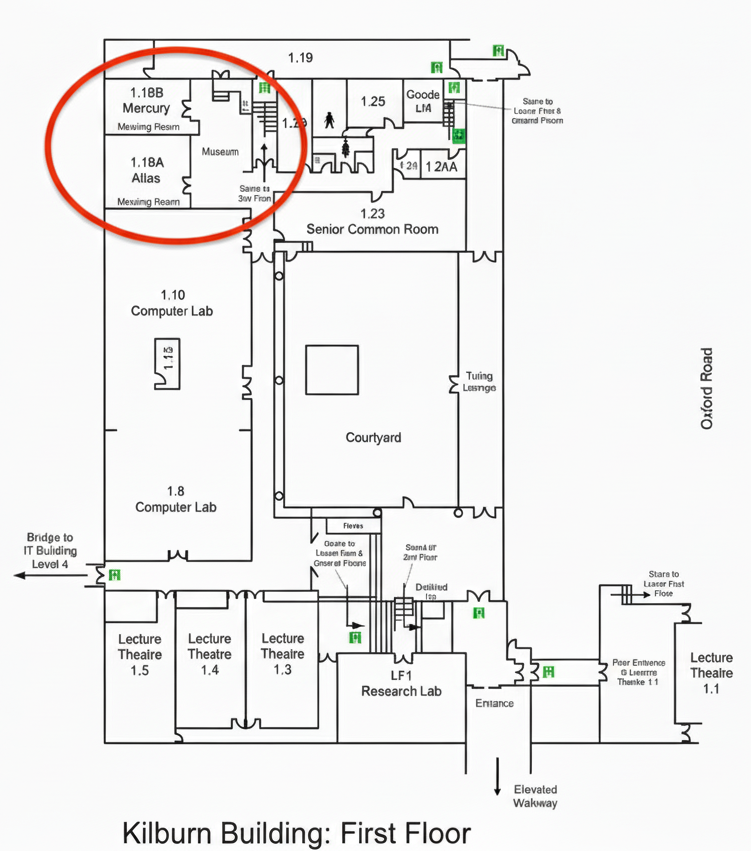

The workshop and tutorial will be held in the Atlas room on the first floor of the Kilburn Building, University of Manchester, Oxford Road, M13 9PL.

The best way to enter and leave the building is through the entrance on the North side of the building.

See the map of the first floor below.

FEAR will be a hybrid meeting, supporting both virtual and in-person attendance. We strongly encourage in-person attendance.

Tutorial, 3 November 2025

| Time | Session |
| --- | --- |
| 11:00 | Welcome |
| 11:15 | What is Machine Ethics - Marija Slavkovik, University of Bergen |
| 12:15 | Lunch |
| 13:00 | Normative Multi-Agent Systems: An Introduction - Marina de Vos, University of Bath |
| 14:00 | Reward Machines and Norms - Brian Logan, University of Aberdeen |
| 15:00 | Coffee |
| 15:30 | Evaluating Machine Ethics - Louise Dennis, University of Manchester |

Workshop, 4 November 2025

| Time | Session |
| --- | --- |
| 10:00 | Welcome |
| 10:15 | Keynote Talk: What Virtues Should a Care Robot Possess? Some Simulation Experiments and Human Results - Vivek Nallur, University College Dublin |
| 11:15 | Coffee |
| 11:45 | NAEL: Non-Anthropocentric Ethical Logic (Short Paper) - Bianca Maria Lerma and Rafael Penaloza |
| 12:00 | Lunch |
| 13:00 | Keynote Talk: Evolving Normative Systems: Adapt or Become Irrelevant - Marina de Vos, University of Bath |
| 15:00 | Coffee |
| 15:30 | Four Freedoms for Deontic Logic: A Framework for Scalable AI Ethics - Ali Farjami |
| 16:00 | Causal Anticipation for Reason-Based AI Alignment (Short Paper) - Yannic Muskalla |
| 16:15 | Community Discussion |
| 17:00 | Close |

Tutors

This tutorial introduces the object of interest and methodology of the field in broad strokes. It offers an overview of the field's developments, including the most impactful references, and presents the open challenges. This tutorial is well suited to anyone considering doing research in machine ethics. No background is necessary.

It is not enough that machines behave ethically. We also need to be able to prove that they do. This tutorial focuses on formal verification for ethical behaviour. It lightly introduces formal verification and then dives into the specific developments and challenges of the field. A background in formal verification is appreciated, but for those with a curious disposition, the tutorial is accessible even without it.

Autonomous agents based on reinforcement learning have attracted a lot of interest. What a reinforcement learning agent chooses to do is governed by its reward function. This tutorial introduces an approach to specifying reward functions based on "reward machines". Reward machines allow the specification of rewards based on the history of an agent's interaction with its environment. As such they can be used to specify norms an agent should conform to while learning to perform another task. After briefly introducing reinforcement learning and reward machines, we will discuss how reward machines can be used to learn to comply with norms in a multi-agent setting. Some basic knowledge of reinforcement learning and some familiarity with formal methods is desirable but not essential.

Norms and regulations play a fundamental role in the governance of human society. Social rules, such as laws, conventions, and contracts, not only prescribe and regulate behavior but also allow individuals the autonomy to violate them, accepting the consequences. This balance between guidance and discretion inspires the design of normative multi-agent systems (NorMAS), where software agents reason about norms to make informed decisions rather than being strictly regimented. Normative multi-agent systems provide a framework for modeling and analyzing agent behavior in environments governed by social rules. Norms enable agents to evaluate the consequences of socially acceptable and unacceptable actions, allowing them to choose behaviors aligned with their own goals while considering system-level expectations. This tutorial offers an accessible introduction to normative multi-agent systems. We will introduce the InstAL framework, which supports the modeling of normative frameworks or institutions and compliance monitoring. We will explore topics like norm representation, enforcement, design-time and runtime verification, and the dynamics of interacting and evolving institutions. No prior experience with multi-agent systems or normative systems is required.

Registration Information

We encourage PhD candidates and interested academics to register via this link.

Important Dates

- Deadline: Thursday, 11th of September 2025, 11:59 UTC-0

- Tutorial: Monday, 3rd of November 2025